Machine vision has long been used in industrial automation, where it replaces traditional manual inspection and improves production quality and output. Cameras have spread rapidly into everyday devices such as computers, mobile devices, and automobiles, but the biggest advance in machine vision has been processing power. As processor performance continues to double every two years, and parallel processing technologies such as multicore CPUs and FPGAs attract increasing attention, vision system designers can now apply sophisticated algorithms to visual data and build smarter systems.

Increased performance means designers can achieve higher data throughput, which enables faster image acquisition, higher-resolution sensors, and the use of some of the newer cameras on the market with the highest dynamic range. Improved performance lets designers not only capture images faster but also process them faster. Preprocessing algorithms (such as thresholding and filtering) and processing algorithms (such as pattern matching) also run more quickly. Ultimately, designers can make decisions based on visual data faster than ever before.

Brandon Treece, director of data acquisition and control products at NI headquarters in Austin, Texas, who is responsible for machine vision, believes that as vision systems increasingly integrate the latest generation of multicore CPUs and powerful FPGAs, vision system designers need to understand the benefits and trade-offs of using these processing components. Not only do they need to run the right algorithms on the right hardware, they also need to understand which architectures are best suited as the basis for their design.

Inline processing and co-processing

Before deciding which type of algorithm is best suited to which processing element, you should know which architecture is best suited to each application. There are two main use cases to consider when developing a vision system based on a heterogeneous architecture of CPU and FPGA: embedded (inline) processing and co-processing.

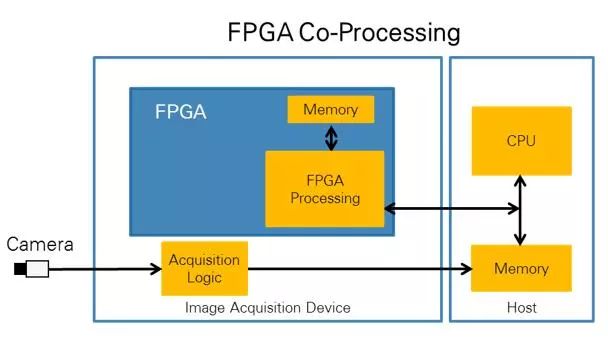

In FPGA co-processing, the FPGA and CPU work together to share the processing load. This architecture is most commonly used with GigE Vision and USB3 Vision cameras because their acquisition logic is best implemented on the CPU:

You can use the CPU to capture images and then send them to the FPGA via direct memory access (DMA) so that the FPGA can perform operations such as filtering or color plane extraction. You can then send the image back to the CPU for more advanced operations such as optical character recognition (OCR) or pattern matching.

In some cases, you can implement all the processing steps on the FPGA and only send the processing results back to the CPU. This allows the CPU to use more resources for other operations such as motion control, network communication, and image display.

Figure 1. In FPGA coprocessing, images are acquired by the CPU, sent to the FPGA via DMA, and then processed by the FPGA.

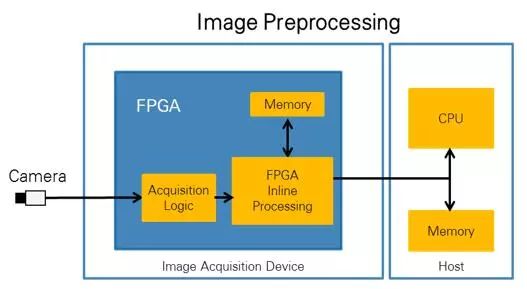

In an embedded FPGA processing architecture, you can connect the camera interface directly to the pins of the FPGA so that pixels can be sent directly from the camera to the FPGA. This architecture is often used with Camera Link cameras because their acquisition logic is easy to implement using digital circuitry on the FPGA. This architecture has two main benefits:

First, as with co-processing, embedded processing lets you move part of the work from the CPU to the FPGA. For example, high-speed pre-processing, such as filtering or thresholding, can be performed on the FPGA before the pixels are sent to the CPU. This also reduces the amount of data the CPU has to process, because the logic on the CPU only needs to capture the pixels in the region of interest, which ultimately increases the throughput of the entire system.

The second benefit of this architecture is the ability to perform high-speed control operations directly within the FPGA without involving the CPU. FPGAs are ideal for control applications because they provide very fast and highly deterministic loop rates. One example is high-speed sorting, where the FPGA sends pulses to an actuator, which then rejects or sorts parts as they pass by.

Figure 2. In an embedded FPGA processing architecture, you can connect the camera interface directly to the pins of the FPGA so that pixels can be sent directly from the camera to the FPGA.

CPU and FPGA vision algorithms

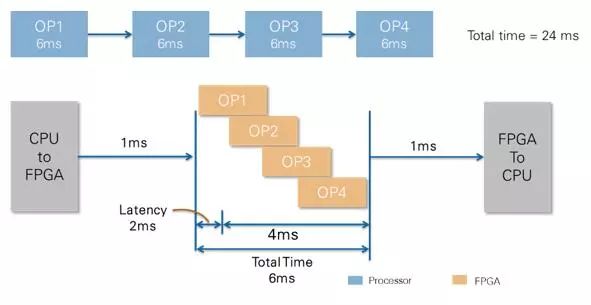

With a basic understanding of the different ways to build a heterogeneous vision system, you can look at which algorithms are best suited to run on an FPGA. First you need to understand how the CPU and the FPGA each execute an algorithm. To explain this, assume a theoretical algorithm that performs four different operations on an image, and then look at how those four operations behave when deployed to the CPU and to the FPGA:

The CPU performs the operations in order, so the first operation must be run on the entire image before the second operation can be started. In this example, it is assumed that each step in the algorithm takes 6 ms to run on the CPU; therefore, the total processing time is 24 ms.

Now consider running the same algorithm on the FPGA. Because FPGAs are inherently massively parallel, the four operations in the algorithm can work on different pixels of the image at the same time. This means that it takes only 2 ms to receive the first processed pixel and a further 4 ms to process the entire image, so the total processing time is 6 ms. This is much faster than the CPU.

Even if you use the FPGA co-processing architecture and transfer images to and from the CPU, the overall processing time (including transfer time) is much shorter than when using the CPU alone.
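The timing comparison above can be written out as a small calculation. This is only a sketch of the article's theoretical example: the function names are illustrative, and the pipelined model simply adds the latency to the first processed pixel to the time needed to stream the rest of the image.

```python
def sequential_time_ms(step_times_ms):
    """CPU model: each step finishes the whole image before the next starts."""
    return sum(step_times_ms)


def pipelined_time_ms(first_pixel_latency_ms, streaming_time_ms):
    """FPGA model: all stages run at once on different pixels, so the total
    is the pipeline latency plus the time to stream the whole image."""
    return first_pixel_latency_ms + streaming_time_ms


# Figures from the example: four steps of 6 ms each on the CPU,
# 2 ms to the first processed pixel and 4 ms for the full image on the FPGA.
cpu_ms = sequential_time_ms([6, 6, 6, 6])
fpga_ms = pipelined_time_ms(2, 4)
speedup = cpu_ms / fpga_ms

print(f"CPU: {cpu_ms} ms, FPGA: {fpga_ms} ms, speedup: {speedup:.1f}x")
```

With the example's figures, the sequential model gives 24 ms and the pipelined model gives 6 ms, a fourfold difference.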

Figure 3. Because FPGAs are massively parallel in nature, they deliver significant performance improvements over CPUs.

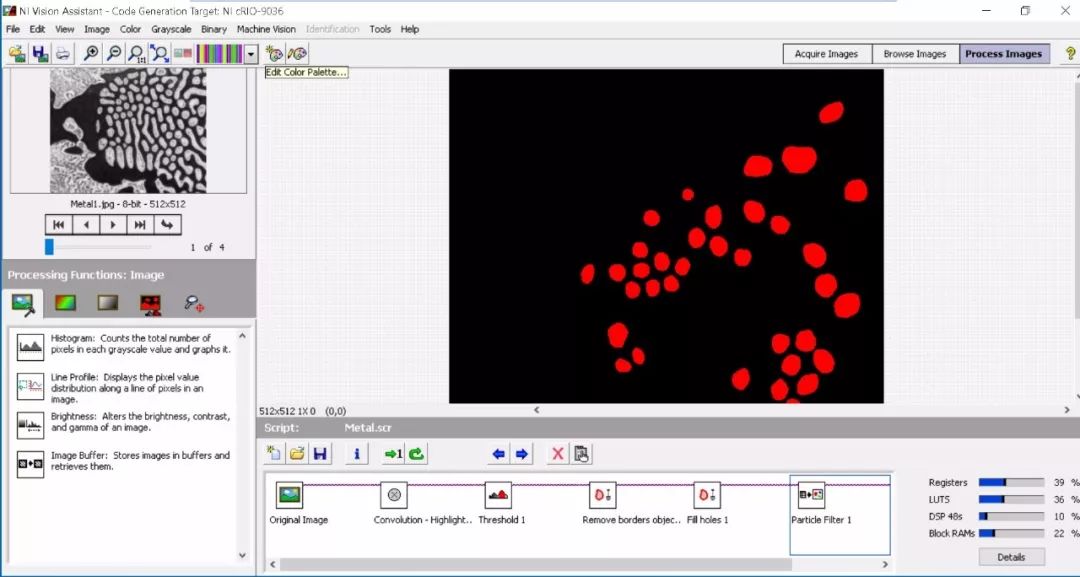

Now consider a real-world example, such as the image processing required for particle counting.

First you need to apply a convolution filter to sharpen the image.

Next, a threshold is applied to the image to produce a binary image. This not only reduces the amount of data in the image by converting it from 8-bit monochrome to binary, but also prepares the image for binary morphology.

The final step is to apply a morphological close operation. This removes any holes in the binary particles.
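The three steps above can be sketched in plain Python on a tiny synthetic image. This is an illustration only, not NI's implementation: the sharpening kernel, the threshold level of 128, the 3x3 structuring element, the 8-connected particle counter, and all function names are assumptions chosen for the example.

```python
# A common 3x3 sharpening kernel (assumed; the article does not specify one).
SHARPEN = [[0, -1, 0], [-1, 5, -1], [0, -1, 0]]


def convolve3x3(img, kernel):
    """Apply a symmetric 3x3 kernel with replicated borders, clamped to 0..255."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0
            for ky in range(3):
                for kx in range(3):
                    yy = min(max(y + ky - 1, 0), h - 1)
                    xx = min(max(x + kx - 1, 0), w - 1)
                    acc += kernel[ky][kx] * img[yy][xx]
            out[y][x] = min(max(acc, 0), 255)
    return out


def threshold(img, level):
    """Convert an 8-bit image to binary: 1 where pixel >= level, else 0."""
    return [[1 if p >= level else 0 for p in row] for row in img]


def _morph(img, combine, outside):
    """3x3 morphology; 'outside' is the value assumed beyond the image edge."""
    h, w = len(img), len(img[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            vals = [img[y + dy][x + dx]
                    if 0 <= y + dy < h and 0 <= x + dx < w else outside
                    for dy in (-1, 0, 1) for dx in (-1, 0, 1)]
            out[y][x] = combine(vals)
    return out


def close(img):
    """Morphological close: dilation followed by erosion."""
    dilated = _morph(img, max, 0)
    return _morph(dilated, min, 1)


def count_particles(binary):
    """Count 8-connected groups of 1-pixels with an iterative flood fill."""
    h, w = len(binary), len(binary[0])
    seen, count = set(), 0
    for y in range(h):
        for x in range(w):
            if binary[y][x] and (y, x) not in seen:
                count += 1
                seen.add((y, x))
                stack = [(y, x)]
                while stack:
                    cy, cx = stack.pop()
                    for dy in (-1, 0, 1):
                        for dx in (-1, 0, 1):
                            ny, nx = cy + dy, cx + dx
                            if (0 <= ny < h and 0 <= nx < w
                                    and binary[ny][nx] and (ny, nx) not in seen):
                                seen.add((ny, nx))
                                stack.append((ny, nx))
    return count


# Synthetic 7x12 image: dark background, a 3x3 particle with a hole, a 2x2 particle.
image = [[10] * 12 for _ in range(7)]
for y in range(2, 5):
    for x in range(2, 5):
        image[y][x] = 200
image[3][3] = 10  # hole inside the first particle
for y in range(3, 5):
    for x in range(8, 10):
        image[y][x] = 200

binary = threshold(convolve3x3(image, SHARPEN), 128)
closed = close(binary)
particles = count_particles(closed)
print("particles:", particles)
```

Each stage here reads only a small neighborhood around each pixel, which is the property that lets an FPGA stream pixels through all stages at once instead of finishing one stage before starting the next.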

If the above algorithm is executed only on the CPU, the convolution step must finish on the entire image before the threshold step can begin. Using NI's Vision Development Module for LabVIEW and the cRIO-9068 CompactRIO controller, which is based on the Xilinx Zynq-7020 All Programmable SoC, the algorithm takes 166.7 ms to execute.

However, if you run the same algorithm on the FPGA, the steps can execute in parallel, and the algorithm takes just 8 ms. Note that the 8 ms includes both the DMA transfer time to send the image from the CPU to the FPGA and the time for the algorithm to complete. In some applications, the processed image may need to be sent back to the CPU for use by other parts of the application. Including that transfer, the whole process still takes only 8.5 ms. Overall, the FPGA executes this algorithm about 20 times faster than the CPU.
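The "about 20 times" figure follows directly from the measured times quoted above. A quick check, using only the numbers from the text (the variable names are illustrative):

```python
cpu_ms = 166.7               # CPU-only execution time from the text
fpga_ms = 8.0                # FPGA time, including DMA transfer to the FPGA
fpga_round_trip_ms = 8.5     # FPGA time, including sending the image back

speedup_one_way = round(cpu_ms / fpga_ms, 1)
speedup_round_trip = round(cpu_ms / fpga_round_trip_ms, 1)

print(speedup_one_way, speedup_round_trip)
```

Both ratios land close to 20, whether or not the return transfer is counted.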

Figure 4. Running a vision algorithm using the FPGA co-processing architecture delivers up to 20 times the performance of running the same algorithm on the CPU alone.

So why not run each algorithm on the FPGA?

Although FPGAs can offer significant advantages over CPUs for vision processing, those advantages come with trade-offs. Consider, for example, the raw clock frequencies of the CPU and the FPGA. An FPGA's clock frequency is on the order of 100 MHz to 200 MHz, which is clearly much lower than that of a CPU, which can easily run at 3 GHz or higher. Therefore, if an application requires an image processing algorithm that is iterative and cannot take advantage of the FPGA's parallelism, the CPU will process it faster.
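A rough way to see the clock-frequency trade-off is a toy model in which runtime is the number of operations divided by clock rate times operations completed per cycle. Everything here is an assumption made for illustration: the workload size, the 150 MHz FPGA clock (the midpoint of the range quoted above), the parallelism of 64, and the idea that a CPU completes one such operation per cycle. Real hardware is far more complicated.

```python
def runtime_ms(total_ops, clock_hz, ops_per_cycle):
    """Idealized runtime: total operations / operations completed per second."""
    return total_ops / (clock_hz * ops_per_cycle) * 1000.0


TOTAL_OPS = 3_000_000   # hypothetical workload size
CPU_HZ = 3e9            # 3 GHz CPU, as mentioned in the text
FPGA_HZ = 150e6         # assumed midpoint of the 100-200 MHz range

# Strictly serial algorithm: neither device can overlap operations.
cpu_serial_ms = runtime_ms(TOTAL_OPS, CPU_HZ, 1)
fpga_serial_ms = runtime_ms(TOTAL_OPS, FPGA_HZ, 1)

# Parallelizable algorithm: the FPGA completes 64 operations per cycle (assumed).
fpga_parallel_ms = runtime_ms(TOTAL_OPS, FPGA_HZ, 64)

# Parallelism the FPGA needs just to match the CPU in this model.
break_even_parallelism = CPU_HZ / FPGA_HZ

print(cpu_serial_ms, fpga_serial_ms, fpga_parallel_ms, break_even_parallelism)
```

In this model the FPGA is 20 times slower on the serial workload and only pulls ahead once it can keep more than about 20 operations in flight per clock cycle.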

The example algorithm discussed earlier can run up to 20 times faster on an FPGA. Each processing step in that algorithm operates on individual pixels or small groups of pixels, so the algorithm can exploit the FPGA's parallelism to process the image. However, if the algorithm uses processing steps such as pattern matching and OCR, which require the entire image to be analyzed at once, the FPGA's advantage is much weaker. This is because these steps are difficult to parallelize and need large amounts of memory to compare the image against templates. Although FPGAs have direct access to internal and external memory, the amount of memory available to an FPGA is generally far less than the amount available to a CPU, or the amount these operations require.

Overcoming programming complexity

The advantages of FPGAs for image processing depend on the requirements of each application, including its specific algorithms, latency or jitter requirements, I/O synchronization, and power consumption. An architecture that combines an FPGA and a CPU is often used to exploit the strengths of both and to gain a competitive edge in performance, cost, and reliability. However, one of the biggest challenges in implementing FPGA-based vision systems is overcoming the programming complexity of FPGAs. Vision algorithm development is by nature an iterative process, and you have to try a variety of methods to complete any task. In most cases, the question is not which method works but which method works best, and the "best method" depends on the application. For some applications, speed is critical; for others, accuracy matters more. At a minimum, you need to try several different approaches to find the best one for a particular application.

To maximize productivity, you need immediate feedback and benchmarking information about the algorithm, no matter which processing platform you use. With an iterative, exploratory approach, seeing algorithm results in real time saves a great deal of time. What is the correct threshold? How large or small are the particles that the binary morphology filter should remove? Which image preprocessing algorithm and parameters clean up the image best? These are common questions when developing vision algorithms, and the key is being able to make a change and see the result quickly. Traditional approaches to FPGA development, however, can slow down innovation because every design change to the algorithm requires a compile. One way to overcome this is to use an algorithm development tool that lets you develop for both the CPU and the FPGA in the same environment without getting bogged down in FPGA compilation. NI Vision Assistant is an algorithm engineering tool for developing algorithms that are deployed to a CPU or FPGA, which helps simplify vision system design. You can also use Vision Assistant to test algorithms before compiling and running them on the target hardware, while easily accessing throughput and resource utilization information.

Figure 5. Developing algorithms for FPGA hardware with a configuration-based tool that has integrated benchmarking reduces the time spent waiting for code to compile.

So which is better suited to image processing, the CPU or the FPGA? The answer is "it depends." You need to understand the goals of your application in order to choose the processing components that best fit the design. Regardless of the application, however, a CPU-based or FPGA-based architecture and its inherent advantages can take the performance of machine vision applications to the next level.
