On April 23, 2008, having just taken the big gamble of launching the first-generation iPhone, Apple spent $278 million to acquire PA Semi, a company focused on high-performance Power processors, to form the backbone of its processor R&D team. In the iPhone 5, released in September 2012, the "A6" processor at its heart finally stopped using an ARM-licensed core and used Apple's own "Swift" microarchitecture instead.

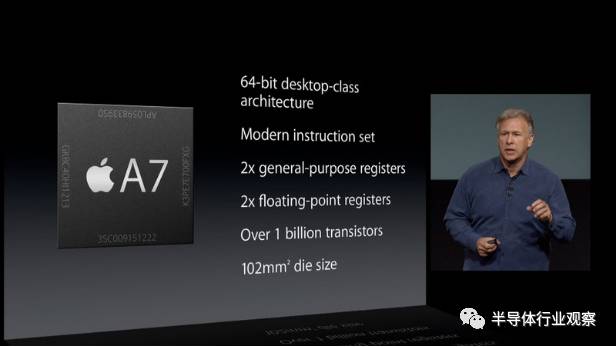

Starting with the "A7" (Cyclone microarchitecture), the world's first 64-bit ARM processor for smartphones and tablets, Apple's own SoCs began to show an overwhelming performance advantage over the ARM Cortex family (and over Qualcomm's own cores, which got caught in the crossfire), and the gap has kept widening over time.

Since then, after each generation of iPhone is released, the coverage on major tech media sites and the readers commenting underneath show only two kinds of single-celled reaction:

The article runs under a content-farm headline such as "Everyone is shocked. Even the people who develop benchmarking software don't know what's going on," and then mechanically reheats the years-old leftover "Can Apple replace Intel with its own chips?"

In the comments below, readers talk past one another as believers in "Android liberalism" and "Apple theocracy" clash. Nobody raises a point worth noting, and there is not the slightest technical substance. Religious faith is a wonderful thing.

The author will not beat around the bush and states the conclusion up front:

By firmly controlling the "closed" nature of its hardware and software platform, Apple seized the opportunity created by the ARM instruction set's move to 64-bit and built a series of advanced microarchitectures that can effectively process more instructions at the same time.

Does that sound so sweeping as to be close to nonsense? If you really think so, that is all the more reason to keep reading.

Clearing up the confusion: the "instruction set architecture" as a computer language vs. the "processor core microarchitecture" as the vehicle that executes it

In recent years, thanks to ARM's IP licensing business model, more and more people fail to understand the difference between the two and mix them up completely. Over the years I have heard too many jokes on this subject that are not funny at all, so let me restate it here.

What exactly is the ARM that dominates the smartphone market? Taking the 32-bit ARMv7-A instruction set as an example, the microarchitectures (cores) commonly found in phones are:

The Cortex-A5 / A7 / A9 / A12 / A15 / A17 core microarchitectures that ARM licenses to others as IP.

Qualcomm's own Scorpion / Krait.

The A6 "Swift", the heart of the iPhone 5, which Apple developed behind closed doors after acquiring PA Semi.

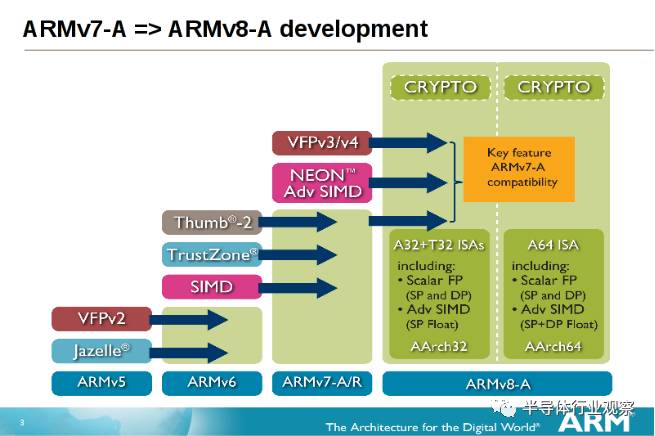

Moving to the 64-bit ARMv8-A, the picture becomes:

The Cortex-A35 / A53 / A57 / A72 / A73 core microarchitectures that ARM licenses to others as IP.

Cores implementing the ARMv8.2-A instruction set, which adds half-precision floating-point support and system reliability features: Cortex-A55 / A75.

Qualcomm's own Kryo.

nVidia's Project Denver.

Apple's A7 "Cyclone", again developed behind closed doors, and the chips that followed: A8 "Typhoon", A9 "Twister", A10 "Fusion" (Hurricane + Zephyr), and A11 "Bionic" (Monsoon + Mistral).

Microarchitectures tailored for servers, such as the Qualcomm Centriq 2400 "Falkor" and the cores of the Cavium ThunderX series.

As long as the operating system is the same (the same version of Android or iOS, for example), all of these core microarchitectures should correctly execute software written in or compiled to the ARM instruction set. Put more formally, or more awkwardly, they share the same application binary interface (ABI). In the same way, x86 processors from both Intel and AMD can install Windows and run applications such as Office and boxed games such as Battlefield.

As for whether Qualcomm, Apple, and nVidia define their own "unofficial" ARM instructions for special needs of their own, that is a discussion for another time.

The road to high performance: make the core microarchitecture process more instructions in the same amount of time

Given that everyone executes the same instruction set "language", the only way for a compatible processor to win on performance is a microarchitecture that processes more instructions than the competition in the same time. The directions are none other than:

Higher clocks: Computer architecture textbooks often teach that "more pipeline stages mean the processor is executing more instructions at once, each in a different stage," but in practice a single pipeline still "spits out" at most one completed instruction per clock cycle, so "deepening the instruction pipeline" is in effect the same thing as "raising the clock frequency." Unfortunately, smartphones have strict power constraints, which makes aggressively chasing high clocks unrealistic.

Wider pipeline: If one lunch box is not enough, eat a second; if one instruction per cycle is not enough, execute a second alongside it. This is the "superscalar" architecture, which traces back to the CDC 6600 of 1964. There is also a purely software approach in which the compiler assigns each instruction to its own execution unit, one slot per unit, known as "Very Long Instruction Word" (VLIW); that is beyond the scope of this article.

A high-performance memory subsystem able to keep the execution units fed: system main memory, cache memory, the bus that connects multiple processor cores, and the cache coherence protocol. Even out-of-order memory access (memory disambiguation) is an indispensable piece of "infrastructure."

There is no free lunch: two damned "dependencies"

However, instruction pipelining and parallel instruction execution also bring new challenges.

Control dependency: What most distinguishes a computer from a calculator is its ability to make conditional decisions, executing different instructions under different conditions. When the processor encounters a branch or jump, it must change the flow of execution: flush the instructions already in the pipeline, fetch instructions from another memory address, then execute them and access their data (or "operands", meaning the targets of the operation, such as a register holding specific data or a memory address). Conditional branches do even more damage, because the pipeline must either stall until the condition is resolved or predict the outcome and press ahead before the result is known.

Solution: branch prediction, plus the "conditional execution" discussed later, which reduces the number of branch instructions.

Data dependency: When multiple instructions execute at the same time, the worst case is that they touch the same register or memory address. The fewer data registers the instruction set defines, the less room software has to steer around such conflicts, and the more often they occur.

Solution: out-of-order execution built around register renaming.
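To make the renaming idea concrete, here is a minimal sketch in Python. It is a textbook-style illustration, not a description of any Apple core: the instruction tuples, the register count of 16, and the function names `rename` and `false_dependencies` are all assumptions made for this example. It shows that once every write gets a fresh physical register, conflicts caused only by reusing a register name disappear, while true producer-to-consumer dependences remain.

```python
def rename(instructions, num_arch_regs):
    """Give every destination a fresh physical register.

    Each instruction is (dest, src1, src2) in architectural register numbers.
    """
    # Initially architectural register i lives in physical register i.
    mapping = {r: r for r in range(num_arch_regs)}
    next_phys = num_arch_regs
    renamed = []
    for dest, src1, src2 in instructions:
        # Sources read whichever physical register currently holds the value.
        p1, p2 = mapping[src1], mapping[src2]
        # The destination always gets a new physical register.
        mapping[dest] = next_phys
        renamed.append((next_phys, p1, p2))
        next_phys += 1
    return renamed


def false_dependencies(instructions):
    """Count write-after-write and write-after-read pairs in program order."""
    count = 0
    for i, (d_i, a_i, b_i) in enumerate(instructions):
        for d_j, _, _ in instructions[i + 1:]:
            if d_j == d_i:          # later write to the same destination
                count += 1
            if d_j in (a_i, b_i):   # later write to a register read earlier
                count += 1
    return count


program = [
    (1, 2, 3),  # r1 = r2 op r3
    (4, 1, 5),  # r4 = r1 op r5   (true dependence on r1)
    (1, 6, 7),  # r1 = r6 op r7   (reuses the name r1)
    (8, 1, 4),  # r8 = r1 op r4
]
renamed_program = rename(program, num_arch_regs=16)

print(false_dependencies(program))          # conflicts before renaming
print(false_dependencies(renamed_program))  # conflicts after renaming
print(renamed_program)
```

In this sketch the third instruction no longer has to wait for the first two merely because it reuses `r1`; only the last instruction still depends on earlier results, which is the dependence that actually matters.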

Then there is speculative execution, which runs instructions ahead of time based on the branch prediction result and so combines branch prediction with out-of-order execution. In short, every effort goes into keeping the pipeline flowing like a production line that never stops, to achieve the highest instruction throughput.

Of course, the deeper the instruction pipeline, the higher the cost of flushing it and restarting execution after a misprediction, just as a fire in a skyscraper gets more terrible the taller the building is. This is the main reason very deep, high-clock pipelines have fallen out of favor in recent years. Real-world applications contain many unpredictable branches, and the higher the misprediction cost, the worse the performance-per-watt ratio.
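A rough way to see why unpredictable branches hurt deep pipelines is to simulate a predictor and multiply its misses by the flush penalty. The sketch below uses the classic 2-bit saturating counter, a textbook scheme chosen only for illustration; real predictors are far more elaborate. The branch traces and the penalties of 16 and 32 cycles are made-up values for this example.

```python
def simulate_two_bit_predictor(outcomes, initial_state=2):
    """Return how many branches a 2-bit saturating counter mispredicts.

    States 0-1 predict "not taken"; states 2-3 predict "taken".
    """
    state = initial_state
    mispredictions = 0
    for taken in outcomes:
        predicted_taken = state >= 2
        if predicted_taken != taken:
            mispredictions += 1
        # Move one step toward the actual outcome, saturating at 0 and 3.
        state = min(state + 1, 3) if taken else max(state - 1, 0)
    return mispredictions


def wasted_cycles(mispredictions, penalty_cycles):
    """Cycles lost to pipeline flushes, assuming a fixed penalty per miss."""
    return mispredictions * penalty_cycles


# A loop branch: taken 9 times, then falls through, repeated 10 times.
loop_branch = ([True] * 9 + [False]) * 10
# A data-dependent branch that flips direction every time.
alternating = [True, False] * 50

for name, trace in (("loop", loop_branch), ("alternating", alternating)):
    misses = simulate_two_bit_predictor(trace)
    print(name, misses, wasted_cycles(misses, 16), wasted_cycles(misses, 32))
```

Both traces contain 100 branches, but the alternating one is mispredicted five times as often, and doubling the penalty (a stand-in for a deeper pipeline) doubles the cycles thrown away in both cases.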

The mysterious message revealed by parameters in the LLVM development environment

So much for the principles. Apple never discloses the technical details of its microarchitectures, so how can we work out the design direction it takes for high performance?

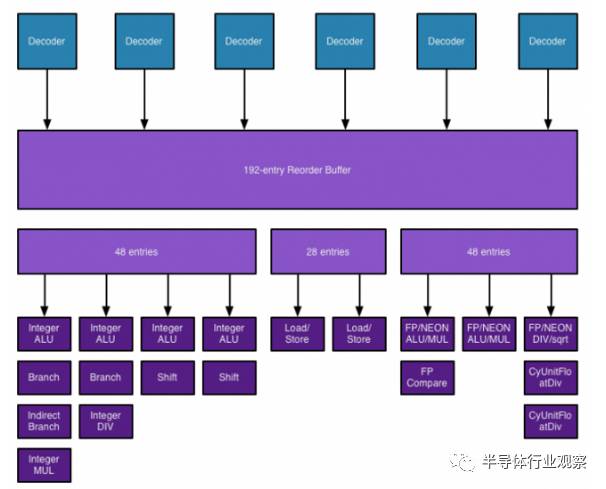

In early 2014, while most people were still stunned by the A7's 64-bit support and remarkable performance, some noticed that the LLVM source code contributed by Apple not only revealed the microarchitecture's code name, "Cyclone", but also contained several important specification parameters:

Issue Width: 6

Reorder buffer (for out-of-order execution): 192

Load latency: 4 cycles

Branch misprediction penalty: 16 cycles (generally between 14 and 19)

This was an astonishing specification at the time, and it remains formidable today. The number of instructions handled per cycle was twice that of contemporary ARM cores (even the 64-bit Cortex-A57 handles only 3), the "depth" of the out-of-order execution engine is at the level of Intel's Haswell, and the instruction pipeline stays at a conventional depth of about 16 stages.

I believe some readers have already read related reports elsewhere, but one rumor deserves attention: some programmers who develop iOS applications ran experiments on instruction throughput and found that as soon as the A7 executes 32-bit code, its instruction throughput drops sharply. This "feature" reportedly persisted all the way through the A10, until the A11, which cannot run 32-bit applications at all.

After that, of course, Apple stopped giving so much away. The Apple processor specification tables on the wiki keep these same figures all the way from the A7 to the A11, and it is unclear whether anyone has verified them by measurement. In any case, everyone keeps being shocked in an endless loop while Apple steadily widens its lead over competitors.

The only certainty is that on my 9.7-inch iPad Pro A10X, by pairing simple instructions, I can sustain "4 integer, 2 floating-point, and 2 memory load" operations per clock cycle, which is formidable performance. In addition, the A10X and A11 dropped the 4MB L3 cache in favor of a large 8MB L2, which hints that Apple has probably made a major breakthrough in cache technology that balances high capacity with low latency.

The A11? I don't have an iPhone 8 or iPhone X at hand, so I will test it when I get the chance.

Let us redraw the chalk outline at the crime scene and summarize Apple's product design direction:

The microarchitecture is designed with 64-bit performance as the priority.

Because mobile processors are bound by low-power requirements, it is hard to pursue performance by raising the clock, so Apple wins with a "wider" instruction pipeline instead.

Executing more instructions at once means more effort must go into resolving register dependencies.

Hence a more powerful out-of-order execution engine.

And hence the wish that the instruction set itself define more data registers, to reduce the chance of collisions.
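The link between width and dependencies in the list above can be sketched with a toy scheduler. This is a deliberately simplified model and not how any real core works: every instruction takes one cycle, the out-of-order window is unlimited, registers are assumed to be already renamed, and the function name `cycles_to_execute` is invented for this example. The widths of 3 and 6 echo the figures quoted earlier for the Cortex-A57 and Cyclone.

```python
def cycles_to_execute(instructions, issue_width):
    """Greedy dataflow schedule with at most issue_width instructions per cycle.

    Each instruction is (dest, sources) and may issue once all of its source
    registers have been produced. Only true dependences are modeled.
    """
    ready_at = {}         # register -> first cycle in which its value is usable
    issued_in_cycle = {}  # cycle -> number of instructions issued in that cycle
    last_cycle = 0
    for dest, sources in instructions:
        # Earliest cycle allowed by the data dependences.
        cycle = max([ready_at.get(s, 0) for s in sources], default=0)
        # Slide forward until a cycle has a free issue slot.
        while issued_in_cycle.get(cycle, 0) >= issue_width:
            cycle += 1
        issued_in_cycle[cycle] = issued_in_cycle.get(cycle, 0) + 1
        ready_at[dest] = cycle + 1
        last_cycle = max(last_cycle, cycle + 1)
    return last_cycle


# Twelve instructions with no dependences between them.
independent = [(f"r{i}", []) for i in range(12)]
# Twelve instructions where each one needs the result of the previous one.
chain = [("r0", [])] + [(f"r{i}", [f"r{i - 1}"]) for i in range(1, 12)]

for width in (1, 3, 6):
    print(width, cycles_to_execute(independent, width), cycles_to_execute(chain, width))
```

Under these assumptions the independent instructions finish in fewer cycles as the machine gets wider, while the dependent chain takes the same number of cycles at every width. A wide core only pays off when enough independent work can be found, which is why renaming and a large out-of-order window go hand in hand with width.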

The major reforms brought by the ARM instruction set's move to 64-bit

Moving the ARM instruction set to the 64-bit ARMv8-A was not as simple as "widening the integer registers to 64 bits" and "providing a 64-bit memory address space." Shedding the legacy of its former focus on embedded applications, becoming cleaner and more elegant, making high-performance microarchitectures easier to build, and leading ARM to the promised land of high performance: that was the sacred mission of this instruction set revision.

ARMv8-A revises many things, but in the author's view, apart from removing the mechanisms for speeding up the saving and restoring of CPU state on a context switch (a set of techniques that look minor today) and simplifying exception handling and the execution privilege levels, only two reforms are truly significant:

Doubling the number of general-purpose registers (GPRs), which also happened when AMD moved x86 to 64-bit, with significant effect.

Removing the "conditional execution" that covered the entire instruction set. This goes hand in hand with the former, because the instruction encoding space it frees up is what makes room for the extra registers.

The latter, also known as "predicated execution" (or guarded execution), aims to reduce the number of branches in a program: the instruction set provides simple conditionally executed instructions that test and act in a single step.

An example is the quickest way to explain. Take a simple If-Then-Else. Normally the pipeline can keep going only by waiting for the condition to be resolved or by forcing a branch prediction:

If condition

Then do this

Else

Do that

It becomes like this:

(condition) do this

(not condition) do that

Does that look more succinct? The key point is that the essence of conditional execution is "converting a control dependence into a data dependence."
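The same transformation can be sketched in Python. This only illustrates the shape of the data flow: the Python interpreter still branches internally, and the function names are invented for this example. In the second version the condition is turned into a mask that is combined with both candidate values, so the result depends on the condition as data rather than as a choice of which code to run.

```python
def select_with_branch(condition, a, b):
    # Control dependence: which statement runs depends on the branch outcome.
    if condition:
        return a
    else:
        return b


def select_predicated(condition, a, b):
    # Data dependence: the condition becomes an all-ones or all-zeros mask,
    # and both candidate values flow into the same expression.
    mask = -int(bool(condition))  # True -> -1 (all ones), False -> 0
    return (a & mask) | (b & ~mask)


for condition in (True, False):
    print(select_with_branch(condition, 7, -3), select_predicated(condition, 7, -3))
```

The price is that both `a` and `b` have to be available before the select, which is why this form pays off mainly for short, cheap alternatives.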

Furthermore, studies of past applications found that 60% of If-Then-Else conditionals guard data move instructions, which is why the conditional execution that instruction sets such as DEC Alpha, MIPS, and even x86 added after the fact takes the form of a "conditional move."

Taking Alpha as an example, its instruction format is unified as cmovxx (where xx stands for the condition), a simple conditional move. The original branch sequence:

beq ra, label // if (ra) = 0, branch to 'label'

or rb, rb, rc // else move (rb) into rc

can be simplified with the new instruction as follows:

cmovne ra, rb, rc

Before ARMv8, almost every instruction in the ARM instruction set carried a 4-bit condition code specifying a condition that must be met. If the condition is true, the instruction executes and writes back its result; otherwise its result is discarded or it is not executed at all.
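As a small illustration, the condition field sits in the top four bits (31 to 28) of a 32-bit ARM (A32) instruction word, so it can be extracted with a shift and a mask. The helper names below are made up for this sketch; the two instruction words are the standard encodings of `MOV r0, #1` and its conditional form `MOVEQ r0, #1`.

```python
CONDITION_NAMES = [
    "EQ", "NE", "CS", "CC", "MI", "PL", "VS", "VC",
    "HI", "LS", "GE", "LT", "GT", "LE", "AL",
]


def condition_field(instruction_word):
    """Return the 4-bit condition field in bits 31..28 of an A32 instruction."""
    return (instruction_word >> 28) & 0xF


def condition_name(instruction_word):
    field = condition_field(instruction_word)
    # 0b1111 is not an ordinary condition; it marks a separate unconditional space.
    return CONDITION_NAMES[field] if field < 15 else "unconditional (0b1111)"


MOV_R0_1 = 0xE3A00001    # MOV   r0, #1  (AL: always executed)
MOVEQ_R0_1 = 0x03A00001  # MOVEQ r0, #1  (executed only if the Z flag is set)

print(condition_name(MOV_R0_1), condition_name(MOVEQ_R0_1))

# Register fields are 4 bits wide in A32 and 5 bits wide in A64, so an
# instruction with three register operands needs three more encoding bits.
A32_REGISTER_FIELD_BITS = 4
A64_REGISTER_FIELD_BITS = 5
extra_bits_for_three_operands = 3 * (A64_REGISTER_FIELD_BITS - A32_REGISTER_FIELD_BITS)
print(extra_bits_for_three_operands)
```

The last lines show the trade-off in encoding space: the four bits that every 32-bit ARM instruction spent on a condition are roughly what a three-operand instruction needs in order to address twice as many registers.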

Back to the point: the advantages of conditional execution are obvious:

It speeds up the conditional test itself, because what is actually compared is a single bit, 0 or 1.

Removing the branches for simple conditions increases the potential for executing instructions in parallel. This is why many VLIW instruction sets support conditional execution, and some even define dedicated predicate registers that hold the predicates to be tested, in order to handle more complex and varied conditions, such as software pipelining, which flattens loops.

But why does ARM want to cancel seemingly perfect conditional execution?

Spending 4 bits of every instruction encoding on it is wasteful, so Conditional Select is used instead.

For example, with "CSEL W1, W2, W3, cond", if the condition holds, the contents of register W2 are moved to W1; otherwise W3 is moved to W1. The downside is a slight increase in code size, but it is definitely worth it.

It makes a high-performance out-of-order execution engine more complex to build: the resources an instruction might need must be "locked in advance" at the front end of the pipeline, and the number of registers that have to be renamed later also grows, which makes raising the clock even harder.
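The CSEL example above can be modeled in a few lines of Python. This is a simplified model written for illustration, not an emulator: it covers only 32-bit operands and a handful of condition codes, and the function names are invented. It mimics the two-instruction sequence `CMP W2, W3` followed by `CSEL W1, W2, W3, GT`, which computes a signed maximum without a branch.

```python
MASK32 = 0xFFFFFFFF


def to_signed32(value):
    """Interpret the low 32 bits of value as a signed integer."""
    value &= MASK32
    return value - (1 << 32) if value & 0x80000000 else value


def cmp_flags(a, b):
    """Return the (N, Z, C, V) flags that a 32-bit CMP a, b (a - b) would set."""
    a &= MASK32
    b &= MASK32
    result = (a - b) & MASK32
    n = result >> 31
    z = int(result == 0)
    c = int(a >= b)  # carry set means no unsigned borrow
    # Signed overflow: the operands differ in sign and the result's sign differs from a's.
    v = ((a ^ b) & (a ^ result)) >> 31
    return n, z, c, v


def condition_holds(cond, flags):
    n, z, c, v = flags
    return {
        "EQ": z == 1,
        "NE": z == 0,
        "GE": n == v,
        "LT": n != v,
        "GT": z == 0 and n == v,
        "LE": z == 1 or n != v,
    }[cond]


def csel(wn, wm, cond, flags):
    """Model of CSEL Wd, Wn, Wm, cond: the result is Wn if cond holds, else Wm."""
    return wn if condition_holds(cond, flags) else wm


def max_signed32(w2, w3):
    # CMP W2, W3 ; CSEL W1, W2, W3, GT
    return to_signed32(csel(w2, w3, "GT", cmp_flags(w2, w3)))


print(max_signed32(5, -7), max_signed32(-7, 5), max_signed32(-2**31, 1))
```

Unlike the old scheme, the condition here is an operand of one specific instruction rather than a field attached to every instruction, so ordinary instructions get their encoding bits back.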

The A11 is "very likely" a pure 64-bit microarchitecture

Backward compatibility of the instruction set, which ensures that the processor correctly runs all existing software, is a commercial "asset", but it is also "baggage" for anyone designing a processor microarchitecture.

We have very good reason to believe that Apple's eagerness to drive out "32-bit legacy applications" is meant to pave the way for 64-bit optimization of its processors. There is no other reasonable explanation for the A11's astonishing performance except that it is a pure 64-bit processor whose entire transistor budget is spent where it improves performance (the new heterogeneous multiprocessor scheduling has an effect, but not such a decisive one). Even if the A11 does retain 32-bit compatibility, its 32-bit performance is probably little better than nothing.

Coincidentally, Qualcomm's Centriq 2400, which targets the server market, is also a pure 64-bit design. This is the outcome ARM hoped for when it developed the 64-bit instruction set: high-performance products springing up like mushrooms.

An aside: the possibility of the Mac switching to Apple's own chips

On this "annual" topic (it comes up every year when a new iPhone is released), the author will not stake his grandfather's reputation on irresponsible speculation, and will only leave two questions for readers to think about:

Can Apple bear the cost of the transition, especially when Mac users are already a minority?

Does Apple still want to win over Windows PC users?

Whether the Mac switches to Apple's own chips is not just a matter of performance that can be settled with one sweeping statement. Please consider the commercial factors as well.

Apple also holds the "unfair advantage" of controlling both hardware and software.

Finally, to restate the answer to the question in this article's title:

"By firmly controlling the "closed" nature of its hardware and software platform, Apple seized the opportunity created by the ARM instruction set's move to 64-bit and built a series of advanced microarchitectures that can effectively process more instructions at the same time."

The absolute advantage of this "integration" will be even harder to break on the road to deep learning in the foreseeable future. This is the rich harvest of Apple's bet on the future of the iPhone, and even if you are not an Apple fan, you cannot help but admire Jobs' vision.

As for what PA Semi had achieved that made it worth the risk for Apple, we will talk about that when there is a chance, if there really is one.
