How does an unmanned car learn to drive, step by step?

Just as humans watch the road with their eyes and steer with their hands, an unmanned vehicle uses a set of cameras to sense its environment and a deep learning model to decide how to drive. In general, the process is divided into five steps:

Record environmental data

Analyze and process data

Build a model that understands the environment

Train the model

Refine the model over time

If you want to understand how unmanned cars work, read on.

Record environmental data

An unmanned vehicle first needs the ability to record environmental data.

Specifically, the goal is an even distribution of left and right steering angles. This is easy to achieve by driving laps around the test track both clockwise and counterclockwise. Training on balanced data reduces steering bias and keeps the car from the embarrassing behavior of gradually drifting from one side of the road to the other over a long drive.

In addition, driving slowly (for example, 10 miles per hour) helps record smooth steering angles when cornering. Driving behavior is classified as:

Straight line: 0 <= |X| < 0.2

Small turn: 0.2 <= |X| < 0.4

Sharp turn: |X| >= 0.4

Return to the center

Here X is the steering angle, computed as X = 1/r, where r is the turning radius in meters. The "return to the center" maneuver mentioned above is very important during data recording: it teaches the vehicle to steer back to the center of the lane when it drifts toward the edge of the road. The recorded data is stored in driving_log.csv, where each line contains:

File path to front center camera image

File path to front left camera image

File path to front right camera image

Steering angle
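A minimal sketch of reading driving_log.csv and bucketing each steering angle follows. It assumes the file has no header row and that the first four columns are the center, left, and right image paths followed by the steering angle; any extra trailing columns a simulator might write are ignored. The thresholds are the ones listed above, applied to the magnitude |X| so left and right turns are treated alike. "Return to the center" describes a recording maneuver rather than an angle range, so it has no bucket here. All helper names are hypothetical.

```python
import csv
import io
from typing import Iterator, NamedTuple


class LogRow(NamedTuple):
    center: str      # path to front center camera image
    left: str        # path to front left camera image
    right: str       # path to front right camera image
    steering: float  # steering angle X


def steering_from_radius(r_m: float) -> float:
    """X = 1/r for a turning radius r in meters; a straight line (r = inf) gives 0."""
    return 1.0 / r_m


def classify(x: float) -> str:
    """Bucket a steering value by magnitude using the article's thresholds."""
    a = abs(x)
    if a < 0.2:
        return "straight"
    if a < 0.4:
        return "small turn"
    return "sharp turn"


def read_log(f) -> Iterator[LogRow]:
    # Assumption: no header row; columns beyond the fourth are ignored.
    for row in csv.reader(f):
        if not row:
            continue  # skip blank lines
        center, left, right, steering = (c.strip() for c in row[:4])
        yield LogRow(center, left, right, float(steering))


# Usage with an in-memory log instead of a real file
log = io.StringIO("IMG/c1.jpg, IMG/l1.jpg, IMG/r1.jpg, 0.0\n"
                  "IMG/c2.jpg, IMG/l2.jpg, IMG/r2.jpg, -0.25\n")
for row in read_log(log):
    print(row.center, classify(row.steering))
# IMG/c1.jpg straight
# IMG/c2.jpg small turn
```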

While recording, we aim to collect images for about 100,000 steering angles, so that the model has enough training data and does not overfit because of too few samples. Plotting a histogram of the steering angles periodically during recording lets you check whether their distribution is symmetric.
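The periodic symmetry check can be sketched with NumPy. This is a minimal illustration, assuming steering values lie in a symmetric range [-limit, limit]. The `asymmetry` score is a hypothetical helper, not a standard metric: it is 0 for a perfectly mirrored histogram and reaches 1 when all samples fall in bins on one side of zero.

```python
import numpy as np


def steering_histogram(angles, bins=21, limit=1.0):
    # An odd number of bins over a symmetric range keeps one bin centered on 0
    # and makes bin i the mirror image of bin (bins - 1 - i).
    counts, edges = np.histogram(angles, bins=bins, range=(-limit, limit))
    return counts, edges


def asymmetry(counts):
    # Compare each bin with its mirror; 0.0 means left and right are balanced.
    counts = np.asarray(counts, dtype=float)
    total = counts.sum()
    if total == 0:
        return 0.0
    return float(np.abs(counts - counts[::-1]).sum() / (2 * total))


# One extra right turn makes the recording slightly unbalanced
angles = np.array([-0.3, -0.1, 0.0, 0.1, 0.3, 0.3])
counts, _ = steering_histogram(angles)
print(asymmetry(counts))
```

Driving extra laps in the under-represented direction (or adding flipped samples) should push the score back toward 0.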

Analyze and process data

The second step is to analyze the recorded data and prepare it for building the model. The goal at this point is to generate more training samples for the model.

The image below was taken by the front center camera. It has a resolution of 320 x 160 pixels with red, green, and blue channels. In Python, it can be represented as a three-dimensional array in which each pixel value ranges from 0 to 255.

For steering, what matters is the road area below the horizon, including the lane markings on both sides. The rest of the frame can be cropped away with the Cropping2D layer in Keras to reduce noise in the model's input.
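In NumPy terms, a 320 x 160 RGB frame is an array of shape (160, 320, 3), and cropping is plain slicing. The crop amounts below (70 rows from the top, 25 from the bottom) are assumed values, not ones given in this article. In a Keras model the same operation would be a `Cropping2D(cropping=((70, 25), (0, 0)))` layer.

```python
import numpy as np

HEIGHT, WIDTH, CHANNELS = 160, 320, 3
CROP_TOP, CROP_BOTTOM = 70, 25  # assumed values: scenery on top, car hood at the bottom


def crop(image: np.ndarray) -> np.ndarray:
    """Keep only the road region; mirrors Cropping2D(((CROP_TOP, CROP_BOTTOM), (0, 0)))."""
    return image[CROP_TOP:image.shape[0] - CROP_BOTTOM, :, :]


frame = np.zeros((HEIGHT, WIDTH, CHANNELS), dtype=np.uint8)  # pixel values 0..255
print(frame.size)         # 153600 values in total
print(crop(frame).shape)  # (65, 320, 3)
```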

We can use the open-source computer vision library OpenCV to read each image from its file and then flip it about the vertical axis to generate a new sample. OpenCV suits autonomous-driving use cases well because its core is written in C++ and runs fast. Other image augmentation techniques, such as shearing (tilting) and rotation, also help generate more training samples.

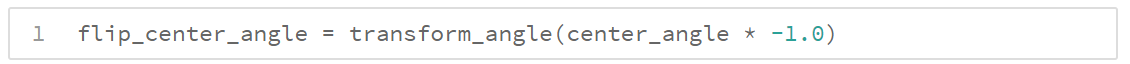

When an image is flipped, its steering angle must be flipped too, by multiplying it by -1.0.
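A minimal sketch of flip augmentation. NumPy's `np.fliplr` mirrors an (H, W, C) image left to right, which is the same operation as OpenCV's `cv2.flip(image, 1)`; NumPy is used here only to keep the example free of an OpenCV dependency. The helper name `flip_sample` is hypothetical.

```python
import numpy as np


def flip_sample(image: np.ndarray, angle: float):
    """Mirror the image about its vertical axis and negate the steering angle.

    For the image part this is equivalent to cv2.flip(image, 1).
    """
    return np.fliplr(image), angle * -1.0


img = np.array([[[1], [2], [3]]], dtype=np.uint8)  # 1 x 3 image, one channel
flipped, new_angle = flip_sample(img, 0.25)
print(flipped[0, :, 0], new_angle)  # [3 2 1] -0.25
```

Flipping twice restores the original sample, which is a quick way to check the augmentation is consistent.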

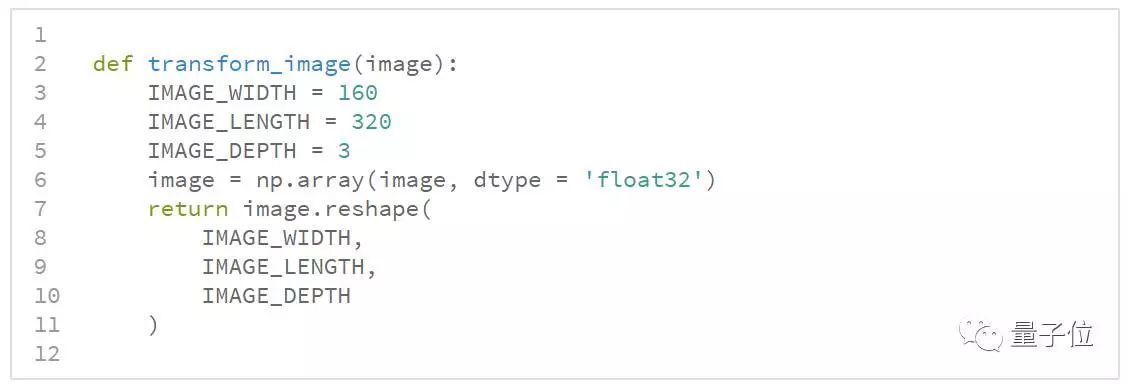

After that, you can use the open-source NumPy library to convert the images into three-dimensional arrays that are convenient for modeling.
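Each image is already a three-dimensional (height, width, channels) array. For training, Keras expects them stacked into one (N, height, width, channels) array alongside an array of N steering angles. A minimal sketch, assuming the images have already been loaded as equally sized NumPy arrays; `to_arrays` is a hypothetical helper.

```python
import numpy as np


def to_arrays(images, angles):
    """Stack 3D images into an (N, H, W, C) array and angles into an (N,) float array."""
    X = np.stack(images).astype(np.float32)
    y = np.asarray(angles, dtype=np.float32)
    if len(X) != len(y):
        raise ValueError("need exactly one steering angle per image")
    return X, y


images = [np.zeros((160, 320, 3), dtype=np.uint8) for _ in range(4)]
X, y = to_arrays(images, [0.0, 0.1, -0.1, 0.3])
print(X.shape, y.shape)  # (4, 160, 320, 3) (4,)
```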

Build a model that understands the environment

Once the image data is ready, we need to build a deep learning model that lets the unmanned vehicle understand its environment by extracting features from the recorded images.

Specifically, our goal is to map an input image containing 153,600 values (160 x 320 pixels x 3 color channels) to a single floating-point output, the steering angle. The model NVIDIA published earlier, in which each layer serves a specific purpose, works well as a base architecture.

Paper describing the NVIDIA model ("End to End Learning for Self-Driving Cars"):

https://arxiv.org/pdf/1604.07316v1.pdf

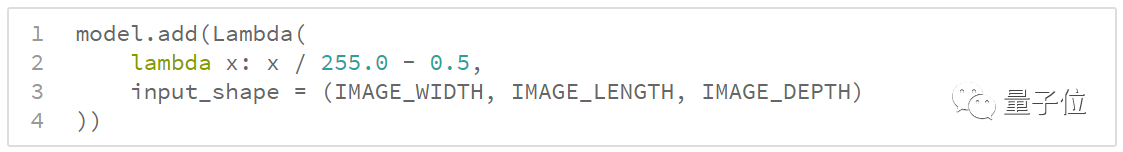
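The convolutional stack from the NVIDIA paper (three 5x5 convolutions with stride 2 and 24, 36, and 48 filters, two 3x3 convolutions with 64 filters, then fully connected layers of 100, 50, and 10 units and a single output) can be sanity-checked with a little arithmetic. This sketch assumes "valid" (no) padding and an input already cropped to 65 x 320 x 3 (the crop amount is an assumption, not from this article). It only computes layer shapes and does not build a trainable model.

```python
def conv_out(size: int, kernel: int, stride: int) -> int:
    """Output length of a 'valid' (unpadded) convolution along one axis."""
    return (size - kernel) // stride + 1


# (filters, kernel, stride) for the five convolutional layers described by NVIDIA
CONV_LAYERS = [(24, 5, 2), (36, 5, 2), (48, 5, 2), (64, 3, 1), (64, 3, 1)]
DENSE_UNITS = [100, 50, 10, 1]  # the final single unit is the steering angle


def layer_shapes(h: int, w: int, c: int):
    shapes = [(h, w, c)]
    for filters, k, s in CONV_LAYERS:
        h, w, c = conv_out(h, k, s), conv_out(w, k, s), filters
        shapes.append((h, w, c))
    shapes.append((h * w * c,))  # flatten before the fully connected layers
    shapes.extend((u,) for u in DENSE_UNITS)
    return shapes


for shape in layer_shapes(65, 320, 3):
    print(shape)
```

With this input the last convolution yields a 1 x 33 x 64 feature map, i.e. 2,112 values feeding the first fully connected layer.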

Next, we normalize the 3D array to the range [0, 1] so that large raw pixel values do not destabilize training. We divide by 255.0 because that is the maximum possible value of a pixel.
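Normalization is a single element-wise operation. In a Keras model the same scaling is often placed inside the network, for example in a `Lambda` layer, so it is applied identically at prediction time. A minimal NumPy sketch; the optional shift by 0.5 to center values on zero is a common variant, not something this article prescribes.

```python
import numpy as np


def normalize(image: np.ndarray, center: bool = False) -> np.ndarray:
    """Scale uint8 pixels from [0, 255] to [0.0, 1.0]; optionally shift to [-0.5, 0.5]."""
    x = image.astype(np.float32) / 255.0
    return x - 0.5 if center else x


px = np.array([0, 128, 255], dtype=np.uint8)
print(normalize(px))               # values in [0, 1]
print(normalize(px, center=True))  # values in [-0.5, 0.5]
```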

It also helps to crop away the top of the image (scenery such as sky and trees) and the bottom (the front of the car itself) to reduce noise.

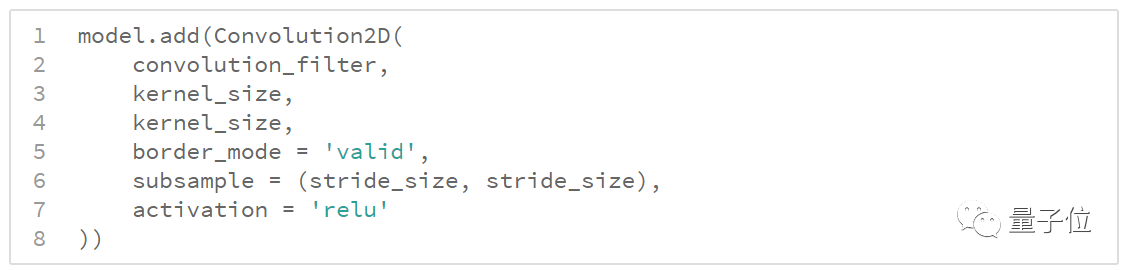

After that, convolutional layers are applied to the 3D array to extract features, such as lane markings, that are critical for predicting the steering angle.
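To see why convolution picks out features such as lane markings, here is a toy single-channel "valid" convolution in NumPy (strictly a cross-correlation, which is what deep learning layers compute). The vertical-edge kernel and the synthetic image are illustrative assumptions; a trained network learns its own kernels instead of using hand-written ones.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray, stride: int = 1) -> np.ndarray:
    """Slide the kernel over a 2D image without padding (cross-correlation, as in CNN layers)."""
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w), dtype=np.float64)
    for i in range(out_h):
        for j in range(out_w):
            patch = image[i * stride:i * stride + kh, j * stride:j * stride + kw]
            out[i, j] = np.sum(patch * kernel)
    return out


# Synthetic 6x8 grayscale patch: dark asphalt with a bright lane marking in column 4
road = np.zeros((6, 8))
road[:, 4] = 1.0

# Hand-written vertical-edge detector: -1 on the left column, +1 on the right column
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)

response = conv2d_valid(road, edge_kernel)
print(response.shape)  # (4, 6)
print(response[0])     # strong +3 / -3 responses on either side of the marking, 0 elsewhere
```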

Because we want the model to handle any type of road, we add dropout to reduce overfitting.
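Dropout itself is simple. The sketch below shows "inverted" dropout, the variant used by Keras's `Dropout` layer: during training each activation is zeroed with probability `rate` and the survivors are scaled by 1/(1 - rate) so the expected value is unchanged; at inference the input passes through untouched. The NumPy implementation and the seed are illustrative.

```python
import numpy as np


def dropout(x: np.ndarray, rate: float, training: bool, rng: np.random.Generator) -> np.ndarray:
    """Inverted dropout: zero each unit with probability `rate`, during training only."""
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate  # True with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(0)
activations = np.ones(100_000)
out = dropout(activations, rate=0.5, training=True, rng=rng)
print((out == 0).mean())  # close to 0.5
print(out.mean())         # close to 1.0: scaling preserves the expected activation
```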

Finally, the model outputs the steering angle as a single floating-point value.

Train the model

After building the model, we need to train it to drive.

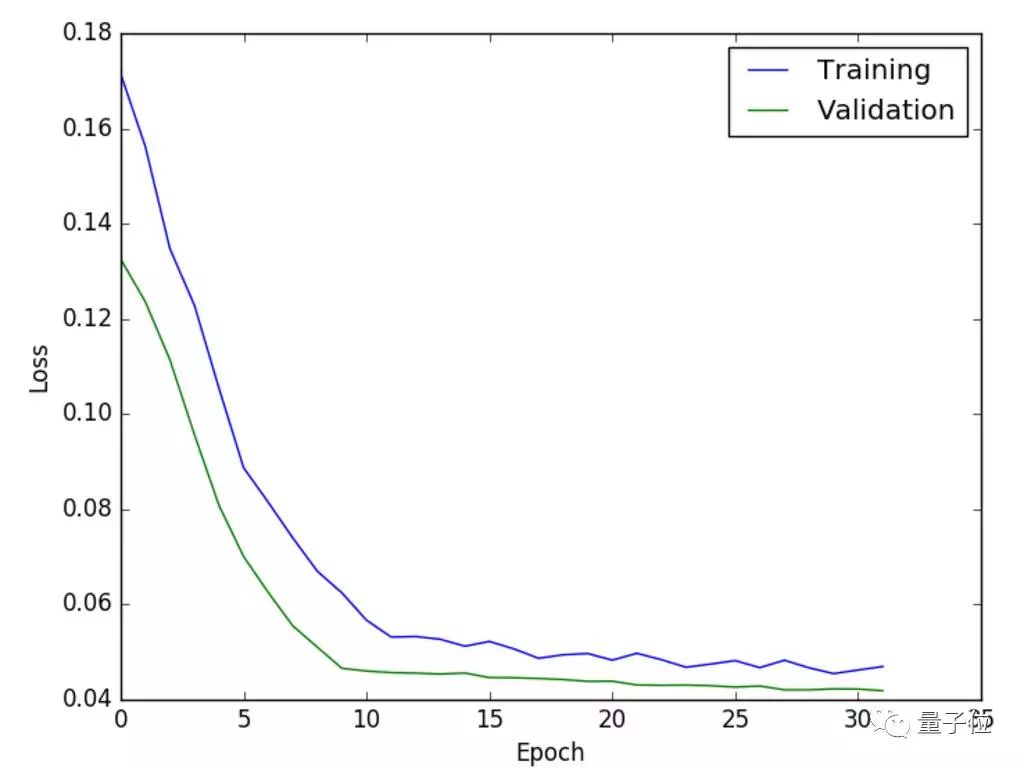

From a technical point of view, the goal at this stage is to predict the steering angle as accurately as possible. We therefore define the loss as the mean squared error between the predicted and actual steering angles.
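In Keras this loss is selected with `loss="mse"` when compiling the model. Written out in plain Python for clarity (the function name is illustrative):

```python
def mean_squared_error(predicted, actual):
    """Average of squared differences between predicted and recorded steering angles."""
    if len(predicted) != len(actual) or len(predicted) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    return sum((p - a) ** 2 for p, a in zip(predicted, actual)) / len(predicted)


# Two predictions are off by 0.2 each: (0 + 0.04 + 0.04) / 3
print(mean_squared_error([0.1, 0.2, -0.3], [0.1, 0.0, -0.1]))
```

Squaring penalizes large steering errors much more than small ones, which suits a task where a big mistake can take the car off the road.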

Samples from driving_log.csv are drawn in random order to reduce bias from the sequence in which they were recorded.

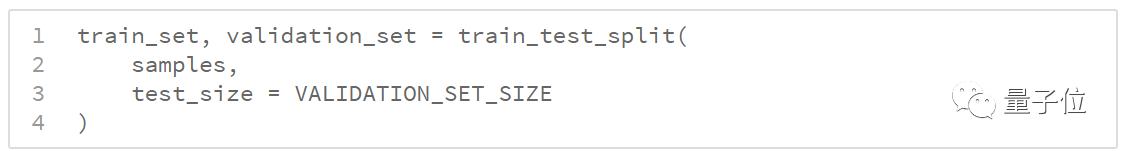

You can use 80% of the samples as the training set and 20% as the validation set, so that you can check how accurately the model predicts steering angles on data it was not trained on.
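Shuffling and the 80/20 split can be sketched in plain Python, assuming the log rows have already been read into a list (scikit-learn's `train_test_split` does the same job if that library is available). The function name and the fixed seed are illustrative; the seed only makes the split reproducible.

```python
import random


def split_samples(samples, validation_fraction=0.2, seed=42):
    """Shuffle a copy of the samples and return (training, validation) lists."""
    shuffled = list(samples)
    random.Random(seed).shuffle(shuffled)  # breaks up the order in which laps were recorded
    n_val = int(round(len(shuffled) * validation_fraction))
    return shuffled[n_val:], shuffled[:n_val]


train, val = split_samples(range(100))
print(len(train), len(val))  # 80 20
```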

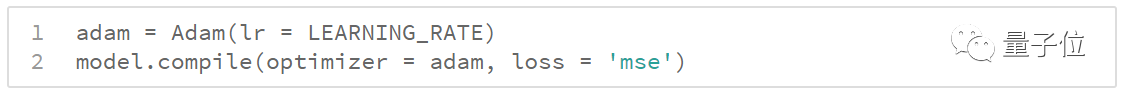

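After that, the mean squared error is minimized with Adam (adaptive moment estimation). Compared with plain gradient descent, Adam's main advantage is that it borrows the physical idea of momentum, averaging recent gradients to smooth its path, and also adapts the step size for each parameter, which usually makes it converge faster and more reliably. Like other gradient-based methods, however, it is not guaranteed to reach the global optimum.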
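The Adam update rule is short enough to write out. This NumPy sketch minimizes a toy one-parameter loss f(x) = (x - 3)^2 rather than a network's MSE, using the defaults suggested in the original Adam paper (beta1 = 0.9, beta2 = 0.999, epsilon = 1e-8); the learning rate of 0.1 is chosen only so the toy converges quickly. In Keras the optimizer is selected with `optimizer="adam"` in `model.compile`.

```python
import numpy as np


def adam_minimize(grad, x0, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    x = np.asarray(x0, dtype=float)
    m = np.zeros_like(x)  # first moment: moving average of gradients (the "momentum" part)
    v = np.zeros_like(x)  # second moment: moving average of squared gradients
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x = x - lr * m_hat / (np.sqrt(v_hat) + eps)  # per-parameter adaptive step
    return x


# Toy loss f(x) = (x - 3)^2 with gradient 2(x - 3); the minimum is at x = 3
x_min = adam_minimize(lambda x: 2 * (x - 3.0), x0=[0.0])
print(x_min)  # close to [3.]
```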

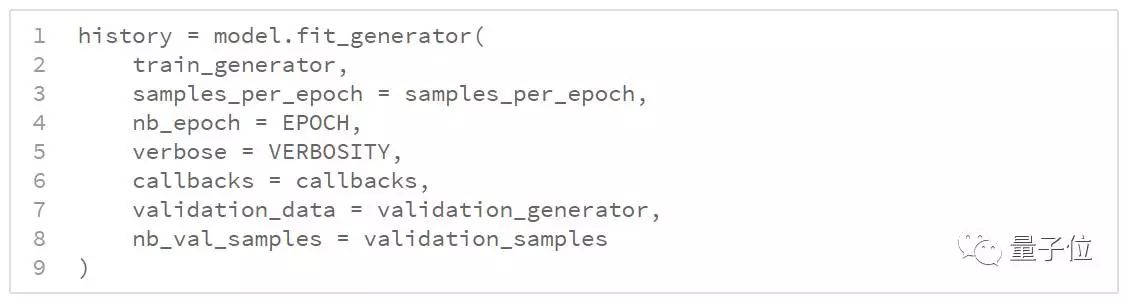

Finally, the model is fitted using a generator. Because there are so many images, the entire training set cannot be loaded into memory at once, so a generator produces the images batch by batch during training.
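A minimal batch generator, assuming a hypothetical `load_image(path)` callable that returns one image as a NumPy array (for example, a thin wrapper around OpenCV's `cv2.imread`). It loops forever and reshuffles on every pass, because Keras draws a fixed number of batches per epoch from a generator (`steps_per_epoch`) instead of waiting for it to end.

```python
import random

import numpy as np


def batch_generator(samples, batch_size, load_image, seed=0):
    """Yield (images, angles) batches forever.

    `samples` is a list of (image_path, steering_angle) pairs; `load_image` is a
    hypothetical callable that reads one image from disk.
    """
    rng = random.Random(seed)
    samples = list(samples)
    while True:
        rng.shuffle(samples)  # new order on every pass over the data
        for start in range(0, len(samples), batch_size):
            batch = samples[start:start + batch_size]
            images = np.stack([load_image(path) for path, _ in batch])
            angles = np.array([angle for _, angle in batch], dtype=np.float32)
            yield images, angles


def fake_load(path):
    # Stand-in loader for the example: returns a blank frame instead of reading a file
    return np.zeros((160, 320, 3), dtype=np.uint8)


gen = batch_generator([(f"img_{i}.jpg", 0.0) for i in range(10)], batch_size=4, load_image=fake_load)
X, y = next(gen)
print(X.shape, y.shape)  # (4, 160, 320, 3) (4,)
```

Only one batch of images is held in memory at a time, which is the point of using a generator here.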

Refine the model over time

Refining the model is the final step: we want its accuracy and robustness to improve over time. In our experiments we tried different architectures and hyperparameters and observed their effect on the mean squared error. Which model is best? Unfortunately there is no single answer, because most improvements come at the cost of something else, for example:

Use a faster graphics processing unit (GPU) to reduce training time, at a higher cost

Lower the learning rate to increase the chance of converging to a good optimum, at the cost of longer training time

Use grayscale images to reduce training time, at the cost of losing the color information in the red, green, and blue channels

Use a larger batch size to improve the accuracy of gradient estimates, at the cost of more memory

Use more samples in each epoch to reduce fluctuations in the loss, at the cost of longer epochs
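The memory side of the batch-size and grayscale trade-offs above is simple arithmetic. A rough sketch, assuming 160 x 320 input frames stored as 32-bit floats and counting only the input batch (activations, gradients, and weights take additional memory that depends on the model):

```python
BYTES_PER_FLOAT32 = 4


def input_batch_bytes(batch_size: int, height: int = 160, width: int = 320, channels: int = 3) -> int:
    """Bytes needed to hold one batch of float32 input images."""
    return batch_size * height * width * channels * BYTES_PER_FLOAT32


for channels, label in [(3, "RGB"), (1, "grayscale")]:
    for batch in (32, 128):
        mib = input_batch_bytes(batch, channels=channels) / 2**20
        print(f"{label:9s} batch={batch:3d}: {mib:6.2f} MiB")
```

Quadrupling the batch size quadruples input memory, while switching to grayscale cuts it to a third.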

Looking back over the whole process, developing an unmanned vehicle turns out to also be a process of understanding the strengths and limitations of computer vision and deep learning.

I firmly believe that the future belongs to autonomous driving.
