Internet companies deal with large-scale machine learning applications every day, so they need distributed systems that can handle such very large-scale tasks routinely. The recently proposed deep forest algorithm uses tree ensembles as its building blocks and has achieved highly competitive results in various fields. However, its performance had not yet been tested on very large-scale tasks. Ant Financial and the research team of Professor Zhou Zhihua of Nanjing University have now built on Ant Financial's parameter server system “Kunpeng” and its artificial intelligence platform “PAI”. Together they developed a distributed deep forest algorithm and an easy-to-use graphical user interface (GUI).

To meet real-world requirements, Zhou Zhihua's team made many improvements to the original deep forest model. The researchers then tested the model on very large-scale tasks such as automatic detection of cash-out fraud, with more than 100 million training samples. The experimental results show that, under different evaluation criteria, the deep forest model achieves the best performance with only light parameter tuning. It thus effectively prevents a large amount of cash-out fraud. Even compared with the best models deployed so far, the deep forest model significantly reduces economic losses.

Introduction

For financial companies such as Ant Financial, cash-out fraud is one of the most common hazards. In cash-out fraud, the buyer pays the seller through the Ant Credit Pay service issued by Ant Financial and obtains cash from the seller. Without proper fraud detection methods, fraudsters can obtain large amounts of cash through cash-out fraud every day, which poses a serious threat to online credit.

Machine learning-based detection methods such as Logistic Regression (LR) and Multiple Additive Regression Trees (MART) currently prevent this fraud to a certain extent. More effective methods are still needed, because even minor improvements can significantly reduce economic losses. At the same time, data-driven machine learning models keep becoming more effective, so data scientists often work closely with product departments to design and deploy statistical models for such tasks. Data scientists and machine learning engineers therefore want a high-performance platform for large-scale learning tasks, which often involve millions or billions of training samples. That platform should make building models simple and should run different kinds of tasks to increase productivity.

Tree-based models such as random forests and MART remain among the main methods for many tasks. Because of their strong performance, most winning solutions in Kaggle competitions and data science projects use ensembles of MART or variants of it. Financial data is sparse and high-dimensional, so it has to be treated as a discrete or mixed modeling problem. For this reason, models such as deep neural networks are not well suited to the daily work of a company like Ant Financial.

Zhou Zhihua's research team recently proposed the deep forest algorithm. It is a new deep structure that does not rely on differentiable, gradient-trained components, which makes it particularly suitable for tree models. Deep forest achieves the best performance among models that are not deep neural networks, and it is highly competitive with the best current deep neural networks. In addition, the number of levels in a deep forest model, and hence its complexity, adapts to the data. It also has far fewer hyperparameters than a deep neural network, so it can be regarded as an excellent alternative to off-the-shelf classifiers.

Many real-world tasks contain discrete features. Such features are troublesome for deep neural networks, because the discrete information must be converted, explicitly or implicitly, into a continuous form. This conversion usually introduces extra bias or loss of information. Tree-based deep forest models handle this kind of data well. In this work, we implemented and deployed the deep forest model on the distributed learning system “Kunpeng”. This is the first industrial practice of a distributed deep forest model on a parameter server, and it can handle millions of high-dimensional samples.

We also designed a web-based graphical user interface on Ant Financial's artificial intelligence platform. It lets data scientists use deep forest models freely by dragging and clicking, without writing any code. This makes building and evaluating models efficient and convenient.

Our main contributions in this work can be summarized as follows:

Based on the existing distributed system “Kunpeng”, we implemented and deployed the first distributed deep forest model and built an easy-to-use graphical interface on our artificial intelligence platform PAI.

We made many improvements to the original deep forest model. These include using MART as the base learner for efficiency and effectiveness, and a cost-based method for handling class-imbalanced data. They also include MART-based feature selection for high-dimensional data and automatic determination of the number of cascade levels under different evaluation metrics.

We verified the performance of the deep forest model on automatic detection of cash-out fraud. The results show that, under different evaluation metrics, the deep forest model performs significantly better than all existing methods. More importantly, its robustness was also verified experimentally.

System Introduction

Kunpeng system

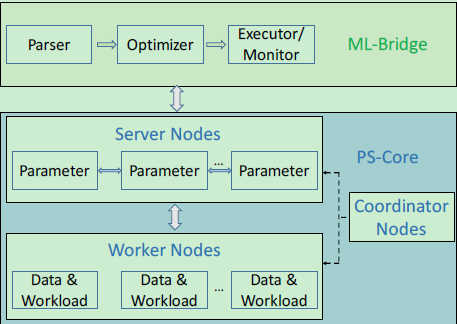

Kunpeng is a distributed learning system based on parameter servers, designed mainly to handle large-scale industrial tasks. As a production-level distributed parameter server, Kunpeng has the following advantages:

(1) a powerful failover mechanism that ensures a high success rate for large-scale jobs;
(2) efficient interfaces for sparse data and general communication;
(3) user-friendly C++ and Python software development kits (SDKs).

Its structure is shown in Figure 1:

Figure 1: Simplified structure diagram, including ML-Bridge, PS-Core section. The user can operate freely on the ML-Bridge.
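The sparse pull/push interface of a parameter server can be shown with a toy, single-process sketch. `ToyParameterServer`, `pull`, `push`, and `worker_step` are hypothetical names chosen for illustration. They are not Kunpeng's actual SDK, and the sketch omits networking, sharding, and failover.

```python
class ToyParameterServer:
    """Hypothetical in-memory stand-in for a parameter server with sparse weights.

    Only keys that have been pushed are stored, so memory grows with the keys
    actually touched rather than with the full (possibly huge) key space.
    """

    def __init__(self, learning_rate=0.1):
        self.learning_rate = learning_rate
        self.weights = {}  # key -> weight; absent keys read as 0.0

    def pull(self, keys):
        # A worker fetches only the keys that occur in its current mini-batch.
        return {k: self.weights.get(k, 0.0) for k in keys}

    def push(self, gradients):
        # Apply a plain SGD step to each sparse key the worker sent back.
        for k, g in gradients.items():
            self.weights[k] = self.weights.get(k, 0.0) - self.learning_rate * g


def worker_step(server, batch_keys, compute_grad):
    """One worker iteration: pull the needed weights, compute gradients, push them."""
    local_weights = server.pull(batch_keys)
    server.push(compute_grad(local_weights))


ps = ToyParameterServer(learning_rate=0.5)
# Hypothetical gradient function: a constant gradient of 1.0 for every key.
worker_step(ps, ["f12", "f907"], lambda w: {k: 1.0 for k in w})
print(ps.pull(["f12", "f907", "f3"]))  # {'f12': -0.5, 'f907': -0.5, 'f3': 0.0}
```

Only the keys a mini-batch touches cross the network, which is what makes this pattern a good fit for sparse, high-dimensional data.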

Distributed MART

Multiple Additive Regression Trees (MART), also known as the Gradient Boosting Decision Tree (GBDT) or the Gradient Boosting Machine (GBM), is a machine learning algorithm widely used in academia and industry. MART is efficient and highly interpretable, so we deployed it on the distributed system as an essential part of the distributed deep forest model. We also combined it with other tree-based models to develop the distributed version of the deep forest model.
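The article does not say how MART is parallelized inside Kunpeng. One common approach for distributed gradient boosting, assumed here purely for illustration, is histogram aggregation. Each worker bins a feature and sums gradients and Hessians per bin over its own rows. A server then adds the workers' histograms, and the best split is chosen from the merged histogram. The gain formula below is the second-order (XGBoost-style) split gain with L2 regularization `reg_lambda`. All function names are hypothetical.

```python
def local_histogram(feature_bins, grads, hess, num_bins):
    """Worker side: sum gradients and Hessians into the bins of one feature."""
    g = [0.0] * num_bins
    h = [0.0] * num_bins
    for b, gi, hi in zip(feature_bins, grads, hess):
        g[b] += gi
        h[b] += hi
    return g, h


def merge_histograms(histograms):
    """Server side: element-wise sum of the workers' (g, h) histograms."""
    g_tot = [sum(col) for col in zip(*(g for g, _ in histograms))]
    h_tot = [sum(col) for col in zip(*(h for _, h in histograms))]
    return g_tot, h_tot


def best_split(g, h, reg_lambda=1.0):
    """Scan bin boundaries and return (best_bin, gain); split is bin <= best_bin."""
    G, H = sum(g), sum(h)
    parent = G * G / (H + reg_lambda)
    best = (None, 0.0)
    gl = hl = 0.0
    for b in range(len(g) - 1):
        gl += g[b]
        hl += h[b]
        gr, hr = G - gl, H - hl
        gain = gl * gl / (hl + reg_lambda) + gr * gr / (hr + reg_lambda) - parent
        if gain > best[1]:
            best = (b, gain)
    return best


# Two hypothetical workers, each holding three rows of one binned feature.
worker_a = local_histogram([0, 0, 1], [-1.0, -1.0, 1.0], [1.0, 1.0, 1.0], 3)
worker_b = local_histogram([1, 2, 2], [1.0, 1.0, 1.0], [1.0, 1.0, 1.0], 3)
g, h = merge_histograms([worker_a, worker_b])
print(best_split(g, h))
```

Only per-bin sums travel over the network, so communication cost depends on the number of bins rather than the number of training rows.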

Deep forest model structure

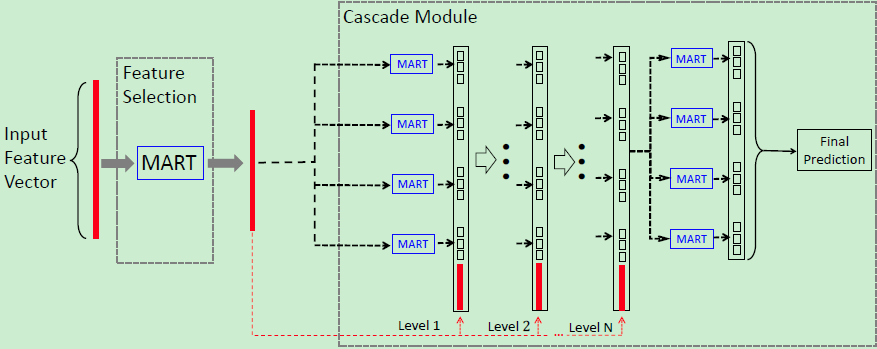

The deep forest model is a recently proposed deep learning framework that uses tree ensembles as its building blocks. Its original version consists of a fine-grained module and a cascade module. In this work, we dropped the fine-grained module and built a multi-level cascade module. Each level consists of several base modules, which are random forests or completely random forests, as shown in Figure 2. The input to each base module is the class vector produced by the previous level concatenated with the original input features. The outputs of the base modules are then combined to form the level's output. K-fold cross-validation is also performed at each level, and the cascade stops growing automatically once validation accuracy no longer improves.

Figure 2: Deep forest model structure
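The cascade logic above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the distributed implementation, and it rests on four assumptions. `NearestCentroid` is a hypothetical stand-in for the forest or MART base modules. Training-set class vectors come from k-fold out-of-fold prediction. Each level's validation prediction is the average of its base learners' class vectors. Growth stops as soon as validation accuracy fails to improve.

```python
class NearestCentroid:
    """Hypothetical stand-in base learner; a real level would hold forests or MART.

    Assumes labels are 0..C-1 and that every class appears in each training subset.
    """

    def fit(self, X, y):
        self.centroids = []
        for c in sorted(set(y)):
            rows = [x for x, t in zip(X, y) if t == c]
            self.centroids.append([sum(col) / len(rows) for col in zip(*rows)])
        return self

    def predict_proba(self, X):
        # Class vector: inverse squared distance to each centroid, normalized.
        out = []
        for x in X:
            w = [1.0 / (1e-9 + sum((a - b) ** 2 for a, b in zip(x, c)))
                 for c in self.centroids]
            s = sum(w)
            out.append([wi / s for wi in w])
        return out


def augment(X, class_vectors):
    """Next-level input: original features followed by the class vectors."""
    return [list(x) + list(cv) for x, cv in zip(X, class_vectors)]


def concat(per_learner):
    """Join the class vectors of all base learners for each sample."""
    return [[v for vec in rows for v in vec] for rows in zip(*per_learner)]


def average(per_learner):
    """Average the class vectors that several base learners give each sample."""
    n = len(per_learner)
    return [[sum(v) / n for v in zip(*rows)] for rows in zip(*per_learner)]


def accuracy(class_vectors, y):
    preds = [max(range(len(p)), key=p.__getitem__) for p in class_vectors]
    return sum(p == t for p, t in zip(preds, y)) / len(y)


def out_of_fold(factory, X, y, k):
    """Training class vectors, each produced by a model that never saw that row."""
    out = [None] * len(X)
    for fold in range(k):
        held = [i for i in range(len(X)) if i % k == fold]
        kept = [i for i in range(len(X)) if i % k != fold]
        model = factory().fit([X[i] for i in kept], [y[i] for i in kept])
        for i, vec in zip(held, model.predict_proba([X[i] for i in held])):
            out[i] = vec
    return out


def grow_cascade(X_tr, y_tr, X_val, y_val, factories, k=2, max_levels=10):
    """Add levels while validation accuracy improves; return (levels, best_acc)."""
    levels, best_acc = [], -1.0
    tr_in, val_in = X_tr, X_val
    for _ in range(max_levels):
        models = [f().fit(tr_in, y_tr) for f in factories]
        val_per = [m.predict_proba(val_in) for m in models]
        acc = accuracy(average(val_per), y_val)
        if acc <= best_acc:
            break  # no improvement: stop growing and drop this level
        levels.append(models)
        best_acc = acc
        tr_per = [out_of_fold(f, tr_in, y_tr, k) for f in factories]
        tr_in, val_in = augment(X_tr, concat(tr_per)), augment(X_val, concat(val_per))
    return levels, best_acc


X_tr = [[0.0], [0.2], [1.0], [1.2], [0.1], [1.1]]
y_tr = [0, 0, 1, 1, 0, 1]
levels, acc = grow_cascade(X_tr, y_tr, [[0.05], [1.05]], [0, 1],
                           factories=[NearestCentroid, NearestCentroid])
print(len(levels), acc)
```

Real forest learners can be swapped in as long as they expose the same `fit`/`predict_proba` shape. Using two different kinds of forest per level adds the diversity the cascade relies on.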

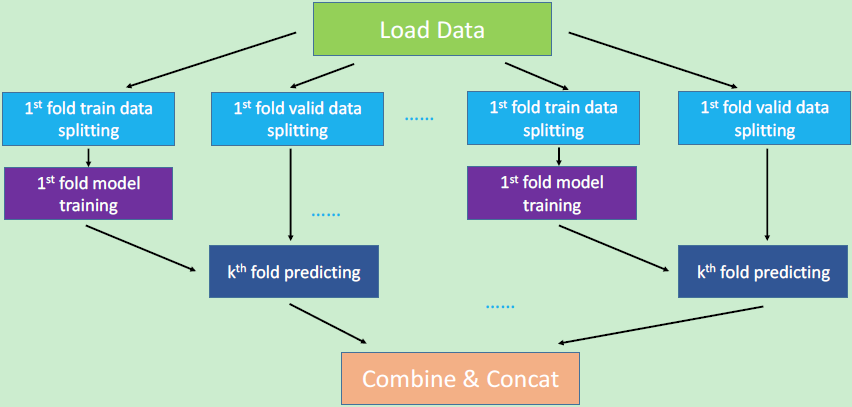

Under a conventional job deployment strategy, model training can start only after all data preparation is complete. Likewise, the test module can begin predicting only after all models have been trained successfully, which significantly reduces system efficiency. We therefore use a directed acyclic graph (DAG) on the distributed system to schedule jobs more efficiently. As its name implies, a DAG is a directed graph with no directed cycles. Its structure is shown in Figure 3.

Figure 3: DAG job scheduling. Each rectangle represents a process, and only related processes are connected to each other.

We treat each node in the graph as a process and connect only related processes. Two nodes are related when the output of one is the input of the other. A node executes only when all of its prerequisites are satisfied. Each node runs separately, so a failing node does not affect nodes that do not depend on it. This significantly shortens the system's waiting time, because each node waits only for its own prerequisites. More importantly, this design makes failover easier. If a node crashes for some reason, we can restart from that node, as long as its prerequisites are satisfied, instead of rerunning the entire algorithm from scratch.
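The scheduling and resume behavior described above can be sketched with Kahn's topological-sort algorithm. In this illustrative sketch, with hypothetical function and node names, a failed node blocks only its descendants while unrelated nodes keep running. A second call that passes the already-completed nodes reruns just the failed node and everything downstream of it.

```python
from collections import deque


def run_dag(deps, run, completed=None):
    """Execute nodes in dependency order and return the set of completed nodes.

    deps maps each node to its prerequisite nodes; run(node) executes one process
    and raises on failure. Nodes in `completed` (from an earlier attempt) are
    skipped, so a rerun resumes from the point of failure.
    """
    done = set(completed or ())
    indegree = {n: len(p) for n, p in deps.items()}
    children = {n: [] for n in deps}
    for node, prereqs in deps.items():
        for p in prereqs:
            children[p].append(node)
    ready = deque(sorted(n for n, d in indegree.items() if d == 0))
    while ready:
        node = ready.popleft()
        if node not in done:
            try:
                run(node)
            except Exception:
                continue  # descendants never become ready; unrelated nodes still run
            done.add(node)
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)
    return done


deps = {"load_train": [], "features": ["load_train"], "train": ["features"],
        "load_test": [], "predict": ["train", "load_test"]}
log, crash_once = [], {"train"}


def run(node):
    if node in crash_once:  # simulate a single crash of the training process
        crash_once.discard(node)
        raise RuntimeError(node + " crashed")
    log.append(node)


first = run_dag(deps, run)                   # train crashes; predict cannot start
final = run_dag(deps, run, completed=first)  # resumes: reruns train, then predict
print(sorted(first), log)
```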

Graphical user interface (GUI)

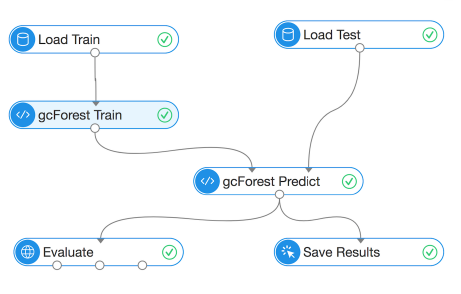

Building models and evaluating their performance effectively is crucial to productivity. To address this, we developed a graphical user interface (GUI) on Ant Financial's artificial intelligence platform PAI.

Figure 4 shows the GUI for the deep forest model. The arrows indicate the order of dependencies between data flows. Each node represents an operation, such as loading data, building a model, or making predictions. For example, all details of a deep forest model are encapsulated in a single node. We only need to specify which base module to use, the number of modules in each level, and a few other basic settings. The default base module is MART, as mentioned earlier. A user can therefore create a deep forest model within a few minutes with only a few mouse clicks and obtain the evaluation results once training finishes.

Figure 4: The GUI interface of the deep forest model on the PAI platform, with each node representing an operation.

▌Experimental application

Data preparation

We verify the performance of the deep forest model on automatic detection of cash-out fraud. The goal of this task is to detect potential fraud risks and avoid unnecessary economic losses. We treat it as a binary classification problem and collect raw information from four aspects. Seller features and buyer features describe identity information, while transaction features and historical transaction features describe the transaction itself. For each transaction we can thus collect more than 5,000 dimensions of features, including both numerical and categorical features.

To build the training and test sets, we sampled training data from Ant Credit Pay users' O2O transactions over several consecutive months. Data from the same scenario in the following months serves as test data.

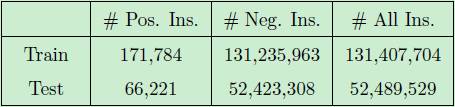

The details of the data set are shown in Table 1. This is a large-scale, class-imbalanced task. As mentioned earlier, the raw data has up to 5,000 dimensions, which may include irrelevant features. Using them directly would make training very time-consuming and reduce the efficiency of model deployment. We therefore use the MART model to compute feature importance and select the features we need.

Specifically, we first train a MART model on all features and then compute each feature's importance score to select the relatively important ones. Experiments show that our model achieves quite competitive performance using only the 300 features with the highest importance scores. This also confirms during validation that many of the original features are redundant. We therefore filter the original features by importance score and keep the top 300 for model training.

Table 1: Data sample sizes for training and test sets
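The filtering step can be sketched as follows, assuming importance is measured as the total split gain a feature contributes across all trees. The article does not say which importance measure is used (split counts are another common choice). The tree representation and feature names below are hypothetical.

```python
from collections import defaultdict


def feature_importance(trees):
    """Total split gain per feature, summed over every split of every tree.

    Each tree is represented (hypothetically) as a list of (feature, gain) splits.
    """
    scores = defaultdict(float)
    for tree in trees:
        for feature, gain in tree:
            scores[feature] += gain
    return dict(scores)


def select_top_k(scores, k):
    """The k highest-scoring feature names; ties are broken by name for stability."""
    ranked = sorted(scores, key=lambda f: (-scores[f], f))
    return ranked[:k]


trees = [[("amount", 5.0), ("seller_age", 1.0)],
         [("amount", 2.0), ("buyer_txn_cnt", 3.0)],
         [("hour", 0.5)]]
print(select_top_k(feature_importance(trees), 2))  # ['amount', 'buyer_txn_cnt']
```

In the setting described above, `k` would be 300.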

▌Analysis of experimental results

We tested the performance of the distributed deep forest model under different evaluation criteria and analyze the results below.

General evaluation criteria

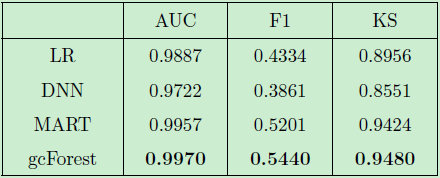

We compared Logistic Regression (LR), Deep Neural Networks (DNN), Multiple Additive Regression Trees (MART), and our deep forest model (gcForest) under common evaluation criteria: AUC, F1 score, and KS score. The results are shown in Table 2:

Table 2: Experimental Comparison Results Under General Evaluation Criteria
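The three general criteria can be computed as below. This is a plain-Python sketch with hypothetical function names. AUC uses the pairwise (Mann-Whitney) formulation, which is quadratic and only suitable for small examples. F1 is taken at a chosen score threshold. KS is the largest gap between the true-positive rate and the false-positive rate over all thresholds. Labels are assumed to be 1 for fraud and 0 otherwise, with both classes present.

```python
def auc(scores, labels):
    """Probability that a random positive outscores a random negative (ties count 1/2)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def f1_at(scores, labels, threshold):
    """F1 score when every sample with score >= threshold is flagged as fraud."""
    pred = [s >= threshold for s in scores]
    tp = sum(p and y == 1 for p, y in zip(pred, labels))
    fp = sum(p and y == 0 for p, y in zip(pred, labels))
    fn = sum((not p) and y == 1 for p, y in zip(pred, labels))
    return 2 * tp / (2 * tp + fp + fn) if tp else 0.0


def ks(scores, labels):
    """Kolmogorov-Smirnov statistic: max over thresholds of TPR - FPR."""
    P = sum(labels)
    N = len(labels) - P
    tp = fp = 0
    best = 0.0
    order = sorted(zip(scores, labels), key=lambda t: -t[0])
    for i, (s, y) in enumerate(order):
        tp += y
        fp += 1 - y
        # Evaluate only between distinct scores, so ties are never split.
        if i + 1 == len(order) or order[i + 1][0] != s:
            best = max(best, tp / P - fp / N)
    return best


scores = [0.9, 0.8, 0.7, 0.4, 0.3, 0.1]
labels = [1, 1, 0, 1, 0, 0]
print(auc(scores, labels), f1_at(scores, labels, 0.5), ks(scores, labels))
```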

Specific Evaluation Criteria (Recall)

We compared the four methods on the recall of positive samples. The results are shown in Table 3.

Table 3: Experimental comparison results under specific evaluation criteria.

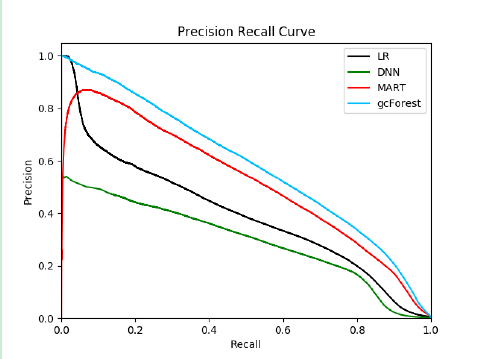

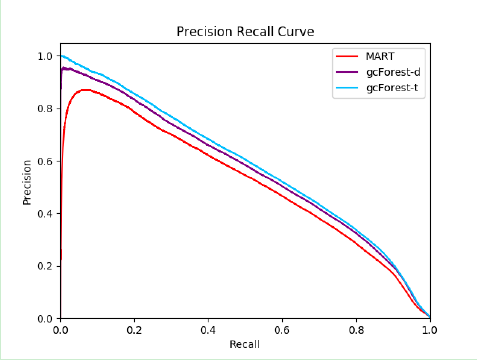

PR curve

To compare the detection performance of the four methods more intuitively, we plotted their precision-recall (PR) curves, shown in Figure 5. The PR curve of the deep forest model clearly encloses those of all other methods. This means its detection performance is better than theirs, which further validates the effectiveness of the deep forest model.

Figure 5: PR Curves of LR, DNN, MART, and gcForest Models
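One way to make "the PR curve of one model encloses another" precise is to compare interpolated precision on a grid of recall values. Interpolated precision is the best precision achievable at or above a given recall level. The sketch below uses hypothetical helper names and toy scores to build PR points from model scores and perform that comparison. It is only an illustration and not necessarily how Figure 5 was produced.

```python
def pr_curve(scores, labels):
    """(recall, precision) points, one per distinct score threshold, high to low."""
    P = sum(labels)
    order = sorted(zip(scores, labels), key=lambda t: -t[0])
    tp = fp = 0
    points = []
    for i, (s, y) in enumerate(order):
        tp += y
        fp += 1 - y
        if i + 1 == len(order) or order[i + 1][0] != s:
            points.append((tp / P, tp / (tp + fp)))
    return points


def interpolated_precision(points, recall_level):
    """Best precision reachable at recall >= recall_level (0.0 if unreachable)."""
    return max((p for r, p in points if r >= recall_level), default=0.0)


def dominates(points_a, points_b, grid=11):
    """True if A's interpolated precision is >= B's at every recall grid point."""
    levels = [i / (grid - 1) for i in range(grid)]
    return all(interpolated_precision(points_a, r) >= interpolated_precision(points_b, r)
               for r in levels)


labels = [1, 1, 0, 1, 0, 0]
curve_a = pr_curve([0.9, 0.8, 0.7, 0.4, 0.3, 0.1], labels)  # hypothetical strong model
curve_b = pr_curve([0.1, 0.2, 0.9, 0.8, 0.3, 0.4], labels)  # hypothetical weak model
print(dominates(curve_a, curve_b), dominates(curve_b, curve_a))
```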

Economic benefits

We analyzed the experimental results under each evaluation criterion in turn and verified the effectiveness of the deep forest model on large-scale tasks. The previous best model for detecting cash-out fraud was a MART model consisting of 600 trees. The deep forest model uses MART base modules with only 200 trees each. With this simpler structure, it brings more significant economic benefits and greatly reduces economic losses.

Model robustness analysis

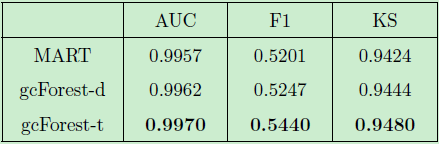

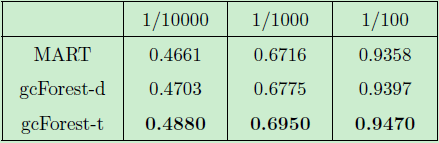

We performed a robustness analysis of the different methods under the above evaluation criteria. The results are shown in Table 4, Table 5, and Figure 6. They correspond respectively to the general evaluation criteria, the specific evaluation criterion (recall), and the PR curves. Here gcForest-d denotes the deep forest model with default settings, and gcForest-t denotes the fine-tuned deep forest model.

Table 4: Experimental Comparison Results Under Common Criteria (Robustness Analysis)

Table 5: Experimental Comparison Results Under Specific Criteria (Robustness Analysis)

Figure 6: PR curves of gcForest-d (default settings), the fine-tuned gcForest-t, and the MART model

Even with default settings, gcForest-d performs far better than the fine-tuned MART model, and the fine-tuned gcForest-t performs better still.
